1. Quadrics network

3. Worldwide Interoperability for Microwave Access

5. FPGA Offloads DSPs

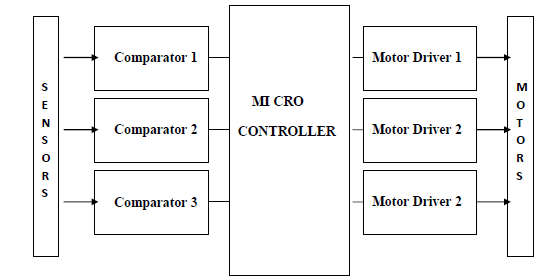

4. Real-Time Obstacle Avoidance

5. Light emitting polymers

6. E-Commerce

7. Extreme ultraviolet lithography

8. Low Power UART Design for Serial Data Communication

9. Multithreading microprocessors

10. Passive Millimeter-Wave

11. Magnetic Resonance Imaging

12. Microelectronic Pills

13. Multisensor Fusion and Integration

14. Molecular Electronics

15. Money Pad, The Future Wallet

16. Treating Cardiac Disease With Catheter-Based Tissue Heating

17. Adaptive Multipath Detection

18. Heliodisplay

19. Virtual Reality

20. Real Time System Interface

21. Wireless LED

22. Real-Time Image Processing Applied To Traffic

23. Class-D Amplifiers

24. Radiation Hardened Chips

25. Time Division Multiple Access

26. Embryonics Approach Towards Integrated Circuits

27. Cellular Digital Packet Data (CDPD)

28. EC2 Technology

29. Crusoe Processor

30. Swarm Intelligence & Traffic Safety

31. Software Radio

32. Integrated Power Electronics Module

33. Power System Contingencies

34. e-Paper Display

35. VISNAV

36. Push Technology

37. Distributed Integrated Circuits

38. Electronics Meet Animal Brains

39. Navbelt and Guidicane

40. Orthogonal Frequency Division Multiplexing

41. Organic LED

42. Optical networking

43. Tunable Lasers

44. Code Division Duplexing

45. Satellite Radio TV System

46. Code Division Multiple Access

47. Project Oxygen

48. Robotic balancing

49. Integer Fast Fourier Transform

50. DakNet

51. Cryptography

52. 3-D ICs

53. Continuously variable transmission (CVT)

54. Fibre Optic Communication

55. AC Performance Of Nanoelectronics

56. Continuously variable transmission (CVT)

57. Intel Express chipsets

58. Military Radars

59. Moletronics: an invisible technology

60. Significance of Real-time Transport Protocol in VoIP

61. Acoustics

62. Testing cardiac disease based on catheter-based tissue heating

63. Cellular Through Remote Control Switch

64. Touch Screens

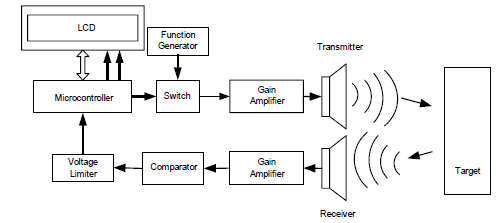

65. Implementation Of Zoom FFT in Ultrasonic Blood Flow Analysis

66. FRAM

67. The Bionic Eye

68. Synchronous Optical Network

69. Satellite Radio

70. Nanotechnology

71. Fault Diagnosis Of Electronic System using AI

72. Asynchronous Chips

73. E-Nose

74. Holographic Data Storage

75. MILLIPEDE

76. Crystalline Silicon Solar Cells

77. Space Robotics

78. Guided Missiles

79. Synchronous Optical Networking

80. Cyberterrorism

81. Plasma Antennas

82. Welding Robots

83. Laser Communications

84. Architectural requirements for a DSP processor

85. High-availability power systems: Redundancy options

86. Utility Fog

87. GMPLS

88. DSP Processor

89. e-governance

90. Smart Pixel Arrays

91. The MP3 standard

92. Resilient Packet Ring (RPR)

93. Fast convergence algorithms for active noise control in vehicles

94. Thermal infrared imaging technology

95. HAAPS

96. IsoLoop magnetic couplers

97. Evolution Of Embedded System

98. Guided Missiles

99. Iris Scanning

100. QoS in Cellular Networks Based on MPT

101. Vertical Cavity Surface Emitting Laser

102. Driving Optical Network Evolution

103. Home Audio Video Interoperability (HAVi)

104. Sensotronic Brake Control

105. Cruise Control Devices

106. Zigbee - zapping away wired worries

107. Global Positioning System

108. Passive Millimeter-Wave

109. High-availability power systems: Redundancy options

110. Light emitting polymers

111. Advanced Mobile Presence Technology

112. Resilient Packet Ring (RPR)

113. Electronic Road Pricing System

114. CorDECT

115. Artificial neural network based Devanagari numeral recognition using SOM

116. Dig Water

117. Fusion Memory

118. Military Radars

119. Satellite Radio TV System

120. Landmine Detection Using Impulse Ground Penetrating Radar

121. Low quiescent current regulators

122. Stream Processor

123. Wireless communication

124. Object Oriented Concepts

125. Internet Protocol Television

126. RTOS: VxWorks

127. MOCT

128. VLSI Computations

129. Terahertz Transistor

130. Integer Fast Fourier Transform

131. Surface Mount Technology

132. The Vanadium Redox Flow Battery System

133. Terrestrial Trunked Radio

134. Fuzzy Logic

135. Dual Energy X-ray Absorptiometry

136. Cellular technologies and security

137. Automatic Number Plate Recognition

138. Turbo codes

139. CRT Display

140. HVAC

141. Ultra-wideband technology

142. GPRS

143. Optical Switching

144. VCSEL

145. Organic Light Emitting Diode

146. Orthogonal Frequency Division Multiplexing

147. Time Division Multiple Access

148. Elliptic curve cryptography (ECC)

149. Service Aware Intelligent GGSN

150. Space Time Adaptive Processing

151. Wireless LED

152. Blast

153. Radio Astronomy

154. Quantum cryptography

155. Organic Electronic Fibre

156. Fundamental Limits Of Silicon Technology

157. Digital Audio's Final Frontier: Class D Amplifier

158. Bluetooth based smart sensor networks

159. Optical Camouflage